Depth image

In addition to the input RGB image (selfie), a depth image can also be provided. A depth image stores, for each pixel, the distance from the camera to the scene. Providing this extra data can improve the likeness of the resulting didimo.

Depth image support has been tested with depth images captured on Apple devices.

The depth image has the following requirements:

- PNG format

- single channel (gray color space)

- each pixel is represented as a 16-bit unsigned integer

- units are in millimeters

- must have the same aspect ratio as the input RGB photo

- must not have orientation correction applied
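
The format requirements above can be checked before uploading a file. The following is a minimal sketch that reads the PNG header directly; `DepthPNGInfo` and `readDepthPNGHeader` are hypothetical helper names used for illustration and are not part of any SDK. It only verifies the container format, bit depth, channel count and aspect ratio. It cannot tell whether the values are in millimeters or whether orientation correction was applied.

```swift
import Foundation

/// Header fields relevant to the depth image requirements (hypothetical helper type).
struct DepthPNGInfo {
    let width: Int
    let height: Int
    let bitDepth: UInt8
    let colorType: UInt8

    /// True when the PNG is single channel (color type 0 = grayscale) with 16 bits per pixel.
    var isGray16: Bool { colorType == 0 && bitDepth == 16 }

    /// Compares aspect ratios by cross-multiplication, which avoids floating-point division.
    /// This is an exact comparison; a tolerance may be needed if either image was resized.
    func matchesAspectRatio(rgbWidth: Int, rgbHeight: Int) -> Bool {
        width * rgbHeight == height * rgbWidth
    }
}

/// Reads the IHDR chunk of a PNG file. Returns nil if the data does not start with a PNG header.
func readDepthPNGHeader(_ data: Data) -> DepthPNGInfo? {
    let bytes = [UInt8](data)
    let signature: [UInt8] = [0x89, 0x50, 0x4E, 0x47, 0x0D, 0x0A, 0x1A, 0x0A]
    // Layout: signature (8) + chunk length (4) + "IHDR" (4) + width (4) + height (4)
    //         + bit depth (1) + color type (1) = 26 bytes
    guard bytes.count >= 26,
          Array(bytes[0..<8]) == signature,
          Array(bytes[12..<16]) == Array("IHDR".utf8) else { return nil }

    // PNG stores multi-byte integers in big-endian order
    func bigEndianInt(at offset: Int) -> Int {
        bytes[offset..<offset + 4].reduce(0) { ($0 << 8) | Int($1) }
    }

    return DepthPNGInfo(width: bigEndianInt(at: 16),
                        height: bigEndianInt(at: 20),
                        bitDepth: bytes[24],
                        colorType: bytes[25])
}
```

A caller would typically load the depth file into a `Data` value, pass it to `readDepthPNGHeader`, and then check `isGray16` and `matchesAspectRatio` against the dimensions of the RGB photo.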

Below is an example of how to implement this on iOS with Swift. The photo variable is of type AVCapturePhoto.

    if var avDepthData = photo.depthData {
        // Make sure the depth map holds 16-bit floating-point depth values (in meters)
        if avDepthData.depthDataType != kCVPixelFormatType_DepthFloat16 {
            avDepthData = avDepthData.converting(toDepthDataType: kCVPixelFormatType_DepthFloat16)
        }
        let depthFrame = avDepthData.depthDataMap
        let width = CVPixelBufferGetWidth(depthFrame)
        let height = CVPixelBufferGetHeight(depthFrame)

        // Create the single-channel, 16-bit output buffer
        var outputBuffer: CVPixelBuffer? = nil
        let cvReturn = CVPixelBufferCreate(kCFAllocatorDefault, width, height, kCVPixelFormatType_OneComponent16, nil, &outputBuffer)
        if cvReturn == kCVReturnSuccess, let pixelBuffer = outputBuffer {
            // Lock pixelBuffer for writing and depthFrame for reading
            CVPixelBufferLockBaseAddress(pixelBuffer, CVPixelBufferLockFlags(rawValue: 0))
            CVPixelBufferLockBaseAddress(depthFrame, .readOnly)
            let baseAddressIn = CVPixelBufferGetBaseAddress(depthFrame)!
            let baseAddressOut = CVPixelBufferGetBaseAddress(pixelBuffer)!
            // Row strides can differ between the two buffers, so query each one
            let bytesPerRowIn = CVPixelBufferGetBytesPerRow(depthFrame)
            let bytesPerRowOut = CVPixelBufferGetBytesPerRow(pixelBuffer)
            let byteSizeOut = MemoryLayout<UInt16>.size
            for y in 0..<height {
                let rowData = baseAddressIn + (y * bytesPerRowIn)
                for x in 0..<width {
                    var pixelValue = Double(rowData.assumingMemoryBound(to: Float16.self)[x])
                    // Convert the depth data units from meters to mm; invalid (NaN) pixels become 0
                    if pixelValue.isNaN {
                        pixelValue = 0
                    } else {
                        pixelValue *= 1000.0
                    }
                    // Clamp to the UInt16 range before converting
                    let pixelValueOut = UInt16(min(Double(UInt16.max), max(0.0, pixelValue)))
                    baseAddressOut.storeBytes(of: pixelValueOut,
                                              toByteOffset: (x * byteSizeOut) + (bytesPerRowOut * y),
                                              as: UInt16.self)
                }
            }
            CVPixelBufferUnlockBaseAddress(pixelBuffer, CVPixelBufferLockFlags(rawValue: 0))
            CVPixelBufferUnlockBaseAddress(depthFrame, .readOnly)

            // Encode the buffer as a 16-bit grayscale PNG.
            // depthData is assumed to be declared elsewhere as an optional Data value.
            let colorSpace = CGColorSpace(name: CGColorSpace.extendedGray)!
            let ciImage = CIImage(cvPixelBuffer: pixelBuffer)
            let ciContext = CIContext()
            depthData = ciContext.pngRepresentation(of: ciImage, format: CIFormat.L16, colorSpace: colorSpace, options: [:])
        } else {
            print("Error creating CVPixelBuffer")
        }
    }

For more information on how to capture an image with depth on an iPhone, please visit the official documentation. Make sure you capture depth with the TrueDepth camera.
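
The per-pixel unit conversion in the iOS example can be isolated into a small pure function, which makes the handling of invalid and out-of-range values easier to test. This is a minimal sketch; `depthMillimeters(fromMeters:)` is a hypothetical helper name, and clamping negative values to 0 is an assumption made here to avoid a runtime trap, not a documented service requirement.

```swift
/// Converts one depth sample from meters to millimeters, stored as UInt16 (hypothetical helper).
func depthMillimeters(fromMeters meters: Float) -> UInt16 {
    // Invalid samples (NaN) are written as 0, matching the iOS example
    guard !meters.isNaN else { return 0 }
    let millimeters = Double(meters) * 1000.0
    // Clamp to the UInt16 range; converting an out-of-range Double to UInt16 would trap.
    // Clamping negative values to 0 is an assumption of this sketch.
    let clamped = min(Double(UInt16.max), max(0.0, millimeters))
    // The conversion truncates any fractional millimeters
    return UInt16(clamped)
}
```

With a 16-bit unsigned value in millimeters, the largest distance that can be represented is 65535 mm, so anything farther away saturates at that value.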

Sample files

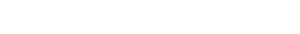

The following files are an example of an RGB photo and its corresponding depth data.

They can be used to test our service.
